Will Artificial Intelligence find the cure for everything?

Early Attempts (And Grand Failures)

In the beginning, there was a great promise.

In 2018, IBM used its Watson supercomputer (originally announced in 2011) to train an AI system to detect colon cancer. The system was trained on data from the Memorial Sloan Kettering Cancer Center and later validated on Chinese patients. It came up with a correct diagnosis 65.8% of the time, about the same as a lucky gambler on a good day. The main problem was that the US patient data did not teach the system about colon cancer indicators in Chinese patients. In formal terms, this is a failure to generalize beyond the data the system has seen, a problem closely related to overfitting.
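To make the generalization problem concrete, here is a minimal sketch in Python. It is a toy illustration only, not IBM's method or data: the single "marker" value, the cohort means, and the helper names (`make_cohort`, `fit_threshold`, `accuracy`) are all assumptions made up for this example. A trivial classifier is fitted on one synthetic population and then scored on a second population whose baseline marker levels are shifted.

```python
import random


def make_cohort(rng, n, healthy_mean, disease_mean):
    """Return (marker_value, has_disease) pairs for a synthetic cohort."""
    cohort = [(rng.gauss(healthy_mean, 1.0), False) for _ in range(n)]
    cohort += [(rng.gauss(disease_mean, 1.0), True) for _ in range(n)]
    return cohort


def fit_threshold(cohort):
    """'Train' by placing the cut-off midway between the two class means."""
    healthy = [x for x, sick in cohort if not sick]
    disease = [x for x, sick in cohort if sick]
    return (sum(healthy) / len(healthy) + sum(disease) / len(disease)) / 2


def accuracy(cohort, threshold):
    """Fraction of patients for whom 'marker above threshold' matches the label."""
    correct = sum((x > threshold) == sick for x, sick in cohort)
    return correct / len(cohort)


rng = random.Random(0)  # fixed seed so the run is repeatable

# Hypothetical populations: the second one has a higher baseline marker level.
train_cohort = make_cohort(rng, 1000, healthy_mean=0.0, disease_mean=3.0)
same_population = make_cohort(rng, 1000, healthy_mean=0.0, disease_mean=3.0)
shifted_population = make_cohort(rng, 1000, healthy_mean=2.0, disease_mean=5.0)

threshold = fit_threshold(train_cohort)
acc_same = accuracy(same_population, threshold)
acc_shifted = accuracy(shifted_population, threshold)

print(f"accuracy on the population it was trained on: {acc_same:.1%}")
print(f"accuracy on the shifted population:           {acc_shifted:.1%}")
```

The classifier is not "wrong" about the data it saw. Its cut-off simply encodes an assumption about baseline levels that does not hold for the second group, so many healthy patients there are flagged as sick.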

A few years earlier (2015), DeepMind (a subsidiary of Google) and the UK NHS began a joint experiment to develop the Google Streams app, which was supposed to help clinicians diagnose medical issues. The training data was provided by the NHS and used by Google to create and train the app. The experiment was supposed to be using “AI”; however, as of 2016, the DeepMind team was quoted as saying that it “is not currently using AI, but would use AI in the future”. That was just the tip of the fiasco. In 2017 the project turned into a data scandal, and it was shut down a few years later (2021), alongside the entire Google Health division.

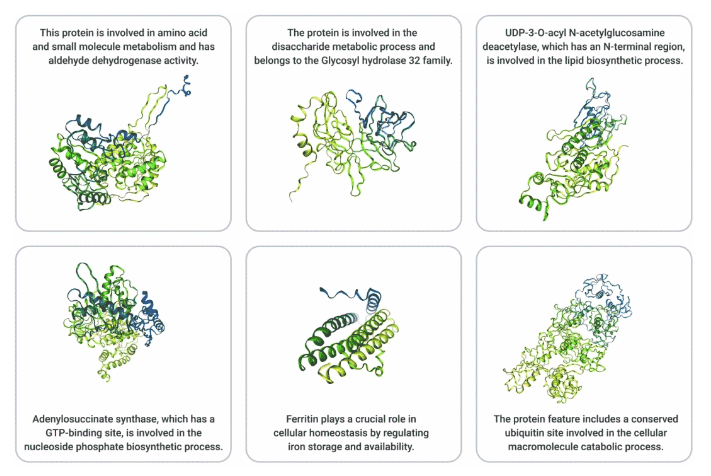

On the other hand, DeepMind (whose co-founder Demis Hassabis recently shared a Nobel Prize in Chemistry) developed AlphaFold. AlphaFold is an AI system that predicts protein structures from amino acid sequences, solving a key challenge in biology. Its 3D models of proteins accelerate research in drug discovery, disease understanding, and biotechnology.

And you may say: Duh?

The 3D structure of proteins is one of the most important fields of research, especially when it comes to drug development and clinical solutions. Remember mad cow disease?

Mad cow disease in humans, known as variant Creutzfeldt-Jakob disease (vCJD), is caused by misfolded prions from infected beef. These prions enter the human brain and trigger healthy proteins to misfold in the same way, leading to progressive brain damage. In simple terms, you consume proteins of the wrong 3D structure, causing them to flip the 3D structure of your healthy ones in your brain, turning it into mush. I guess this exemplifies the importance of protein 3D structures.

But there’s a catch.

In the realm of drug discovery, researchers try to predict how protein-peptide pairs interact at a molecular level using AlphaFold (peptides are short chains of amino acids linked by peptide bonds). By accurately modeling the 3D structure of both the target protein and the binding peptide, AlphaFold helps identify promising peptide-based drugs that can bind to the protein effectively. This accelerates the design and optimization of drugs.

Once potential therapeutic pairs are identified, one may start in vitro experiments, followed by clinical trials on human subjects, which seems like a great plan to quickly find the cure to just about any disease. It’s not.

The problem with the above approach is that many of the candidate pairs that the AI system designated as potentially therapeutic either have no effect in clinical trials or even prove toxic. The reason is that the protein and its fellow peptide may interact beneficially in a vacuum, but when placed into the human body, the complex biological environment affects them in ways the AI model could not have foreseen.

And yet, AlphaFold is a major breakthrough and does expedite drug development.

Another infamous project was Meta’s Galactica. On November 15, 2022, Meta unveiled a new large language model called Galactica, designed to assist scientists. But instead of landing with the big bang Meta hoped for, Galactica died with a whimper after three days of intense criticism, and the company took down the public demo that it had encouraged everyone to try out.


The fundamental problem with Galactica was that it was not able to distinguish truth from falsehood, a basic requirement for a language model designed to generate scientific text. People found that it made up fake papers (sometimes attributing them to real authors), and generated wiki articles about the history of bears in space as readily as ones about protein complexes and the speed of light. Galactica also had problematic gaps in what it could handle. When asked to generate text on certain topics, such as “racism” and “AIDS,” the model responded with: “Sorry, your query didn’t pass our content filters. Try again and keep in mind this is a scientific language model.” All in all, a nice try, but no cigar.

There are numerous other examples, some early and some as late as 2023, of experiments that went sour while trying to resolve medical and clinical issues, discover new drugs (resulting in the discovery of many new and dangerous nerve agents), or battle viruses.

But it’s not all thorns. Some new research and commercial projects carry great promise.

Assist vs. Replace

Popular news outlets often tell us that AI will soon replace “add your favorite profession here”. Some prophesied that doctors are soon to be extinct, replaced by AI-based systems that would be less error-prone, never too tired, and ever empathic. Such proclamations are utter nonsense (I am not going to go into the details of why current AI technology would never replace critical jobs where you are not allowed to be wrong, as that would be a highly mathematical and lengthy essay, which is out of the scope of this piece).

On the other hand, modern AI systems and current developments are very helpful in working for, and alongside, doctors and other medical practitioners. And entrepreneurs have not let those opportunities go by.

Many current, heavily funded, mid-stage startups look like they could outlive past failures and come up with AI systems that would truly benefit humankind, medical practitioners, and public health.

If you have ever visited the ER, you may remember how frustrating it is to sit there and wait for the doctor to check you and solve your immediate problem, alongside others just like you. Triage, i.e. the process of assessing your symptoms and test results to decide who gets treated first, is long and cumbersome.

AI.Doc, an Israel-based startup, tries to optimize and expedite it with a triage solution that uses AI to streamline patient intake and prioritization in healthcare settings. It assesses and categorizes patients based on the severity of their symptoms and test results (e.g. radiology images, and more). This ensures urgent cases receive prompt attention while less critical cases are efficiently managed.
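The prioritization step itself can be pictured as a priority queue. The sketch below is only an illustration of that idea, not the company's actual system: the `TriageQueue` class, the case labels, and the severity scores (which in a real product would come from an AI model reading symptoms and test results) are all hypothetical and hard-coded here.

```python
import heapq
from itertools import count


class TriageQueue:
    """Serve the most severe case first; break ties by arrival order."""

    def __init__(self):
        self._heap = []
        self._arrivals = count()  # running arrival counter used as a tie-breaker

    def admit(self, case, severity):
        # heapq is a min-heap, so the severity is negated to pop the highest first.
        heapq.heappush(self._heap, (-severity, next(self._arrivals), case))

    def next_patient(self):
        _, _, case = heapq.heappop(self._heap)
        return case

    def __len__(self):
        return len(self._heap)


queue = TriageQueue()
# Hypothetical severity scores between 0.0 (mild) and 1.0 (critical).
queue.admit("sprained ankle", 0.2)
queue.admit("suspected stroke", 0.95)
queue.admit("mild fever", 0.2)
queue.admit("chest pain", 0.8)

order = [queue.next_patient() for _ in range(len(queue))]
print(order)
```

The hard part in practice is producing a trustworthy severity score, not the queue. The queue only guarantees that, once scored, the suspected stroke is seen before the sprained ankle regardless of who arrived first.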

Another potential breakthrough is being developed by Isomorphic Labs (which happens to be a DeepMind spinoff, managed by the same Demis Hassabis). Their pitch sounds familiar: using AlphaFold (version 3) and additional tooling to expedite drug discovery. However, Eli Lilly, one of the biggest, longest-running, and most respected pharmaceutical companies, thinks this time it’s going to work and has entered a joint project with Isomorphic Labs to develop therapeutically potent molecules.

And there’s 310.AI.

310.AI has come up with what they call programmable biology, or MP4 (click it and browse through many molecular examples, it’s fun). MP4 stands for Molecule Programming (version 4 obviously). It’s a way to specify textual prompts as freeform human-readable specifications of a protein which are then processed into a proprietary protein language with a vocabulary that describes functions, properties, processes, families, domains, active sites, motifs, taxonomy, and more.

In layman’s terms, you tell the model what the protein should do, and the model creates it for you. It sounds like nothing short of science fiction, yet it seems to actually deliver.

But what about the other ailments that are not physically manifested? As you may have guessed by now, that is a rhetorical question.

Mental Health, the New Frontier

It is well known that mental disorders are on the rise. The burden inflicted on humans in modern society is causing anxiety, PTSD, and depression. The world is quickly becoming infested with what are sometimes called mild disorders (even though they may take acute, life-threatening forms).

Large-scale study based on surveys in 29 nations sheds new light on major health problem. … The researchers said the results demonstrate the high prevalence of mental health disorders worldwide, with 50 percent of the population developing at least one disorder by the age of 75.

The Lancet Psychiatry

Volume 10, Issue 9, September 2023

It’s a large-scale worldwide problem waiting to be resolved (or at least somewhat relieved). Doctors (in this case, mental health practitioners) are scarce, very scarce, and at the same time it’s a tough nut to crack.

Creating AI-based chatbots to double as psychologists is of course the holy grail of mental health AI. However, we are not there yet, and cannot afford to unleash current large language models on patients and hope for the best. The risks are simply too high (e.g. a bot by Character.AI, not specifically a mental health chatbot, that allegedly caused a young boy to commit suicide).

Companies like Cass.AI develop AI assistants which, among other uses, help people suffering from anxiety and depression. Their main goal in such cases is “to provide scalable, personalized behavioral health support through AI-powered coaching, digital triage, and connections to crisis counselors”. Basically, the bot is there for you when the human counselor is busy and helps as much as it can (calling the human into the loop if it thinks you are in too deep and need a person to take over).

In short, Cass tries to make mental health care more accessible and cost-effective when human help is lacking or unavailable.

The same goes for Woebot Health, which offers an AI-powered mental health chatbot that provides emotional support and therapy based on cognitive-behavioral techniques.

Again, it’s an assist, not a replacement, for traditional mental health therapies: it tries to expand the availability of those much-needed services while making sure the bots do no harm. And that is a very tricky business, for a good reason.

Analyzing human emotions and behaviour is a multi-modal problem. It spans visual as well as textual and auditory cues. One (i.e. the chatbot) must be able to read the person’s posture, tone of voice, facial expressions, and even heartbeat variations and many other biological signals. Based on those (which may differ from one person to another), the bot needs to compose a real-time mental model of the patient’s ever-changing emotional state and responses to triggers. Only then could it try to respond in a beneficial manner. It’s a tall order even for experienced practitioners, let alone an AI bot, but an important one.

It seems that a Star Trek-like computer that analyzes new killer viruses (and comes up with a glow-in-the-dark injection to cure them within the hour), or a holographic, human-like virtual psychotherapist that counsels the ship’s crew, is not yet here, and would most probably require many more breakthroughs beyond current AI technology. But we try.